Inevitable article about the AI revolution

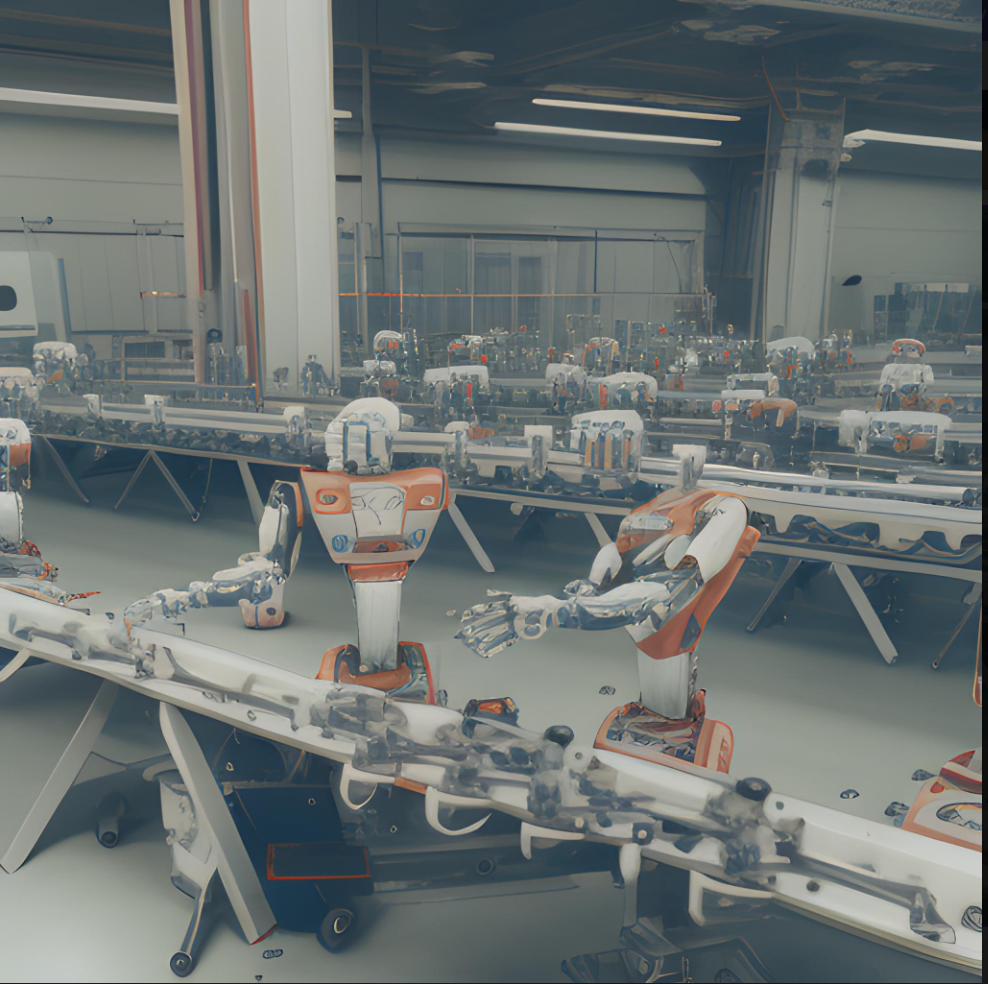

ChatGPT: In the rapidly evolving landscape of technology, Artificial Intelligence (AI) has emerged as a disruptive force set to revolutionize the manufacturing process. As the potential of AI continues to grow, it is transforming traditional manufacturing systems, fostering greater efficiency, productivity, and innovation.

Ah yes, somehow it feels almost inevitable that at some point I would write something about AI. Probably something about how it magically generates content on demand, or how you no longer need artists to create art. And although the wider availability of tools like ChatGPT (a large language model) and Midjourney (a diffusion model) has made AI more usable and appealing to a much larger audience, these ‘innovations’ aren’t necessarily the ones I’m most looking forward to.

I feel like not enough people are talking about Iterative Design powered by Artificial Intelligence.

Customer-driven Agile Software Development

Or how I turned around the entire software development process in an organisation from ‘agile’ to agile, so that we could better serve our customers.

Pretty early in the creation of my current team, it was obvious we had a group of people with a pretty wide selection of skillsets who could all contribute to a variety of projects on our roadmap. It was very hard to get external and internal stakeholders to agree on urgency vs. priority and timelines. Then there was the additional complexity of external deadlines and revenue. If a customer is paying, or even has paid, for something to be developed and there is a timeline, does this mean it automatically takes priority over other things that SHOULD be taking precedence? Furthermore, does the decision lie with the technical team, or with someone else?

Scalable data processing

“I’ve heard you like scalability, so what about some scalability to scale your scalability?”

The use case for this architecture is a very scalable data processing pipeline that handles both authentication with the data provider and processing the data into flexible datasets. For the purpose of this specific setup, the consumption of the data itself (which is managed by a separate application) is out of scope.

In this specific setup we are talking about authenticating over WebSockets, processing the incoming data through an application layer and writing it into either our ClickHouse or MongoDB datasets. Though there will not be much detail, I will describe all three layers individually.
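
To make the three layers a bit more tangible, here is a minimal Python sketch of how they could hand data to each other. It is an illustration under assumptions, not the actual implementation: the WebSocket connection is replaced by a hard-coded list of messages, the two databases are replaced by in-memory lists, and the names (`ingest`, `process`, `write`, `run_pipeline`) as well as the rule that "tick" records go to ClickHouse and everything else to MongoDB are all hypothetical.

```python
import asyncio
import json

# Hypothetical stand-in for messages arriving over the provider's WebSocket.
RAW_MESSAGES = [
    '{"type": "tick", "symbol": "ABC", "price": "1.5"}',
    '{"type": "profile", "symbol": "ABC", "name": "Example"}',
]


async def ingest(token, raw_queue):
    """Layer 1: authenticate with the provider, then forward raw messages."""
    if not token:
        # Assumption: a real provider would reject the handshake here.
        raise PermissionError("provider rejected the connection")
    for message in RAW_MESSAGES:
        await raw_queue.put(message)
    await raw_queue.put(None)  # sentinel: the stream has ended


async def process(raw_queue, record_queue):
    """Layer 2: parse raw messages into typed records."""
    while (message := await raw_queue.get()) is not None:
        record = json.loads(message)
        if "price" in record:
            record["price"] = float(record["price"])
        await record_queue.put(record)
    await record_queue.put(None)  # pass the sentinel on to the writer


async def write(record_queue, sinks):
    """Layer 3: route each record to one of the two datasets."""
    while (record := await record_queue.get()) is not None:
        # Assumed routing rule, purely for illustration.
        target = "clickhouse" if record["type"] == "tick" else "mongodb"
        sinks[target].append(record)


async def run_pipeline(token):
    # Bounded queues decouple the layers and apply backpressure.
    raw_queue = asyncio.Queue(maxsize=100)
    record_queue = asyncio.Queue(maxsize=100)
    # In-memory lists stand in for the ClickHouse and MongoDB writers.
    sinks = {"clickhouse": [], "mongodb": []}
    await asyncio.gather(
        ingest(token, raw_queue),
        process(raw_queue, record_queue),
        write(record_queue, sinks),
    )
    return sinks


if __name__ == "__main__":
    print(asyncio.run(run_pipeline("secret-token")))
```

Because the layers only talk to each other through queues, each one could in principle be scaled separately, for example by swapping the in-process queues for a message broker and running several processing workers side by side.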